Clustering Illusion Example

The clustering illusion is the tendency to see significant patterns or clumps in data that are really random, and in particular to misread the inevitable 'streaks' or 'clusters' that arise in small random samples. One example occurred during World War II, when the Germans bombed South London: some areas were hit several times and others not at all.

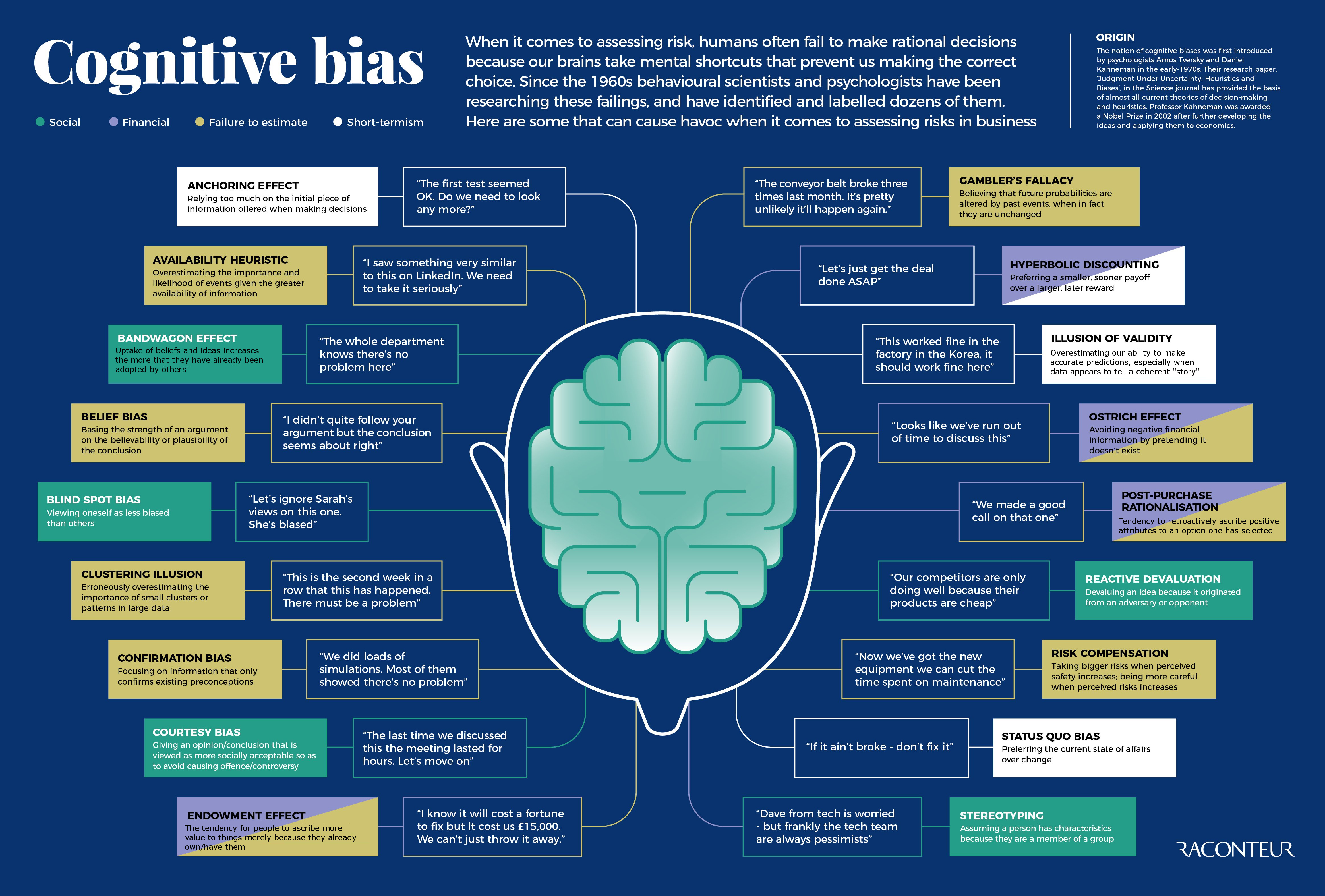

Understanding people and events, collecting data and evidence, and formulating perspectives on events: investigation requires a considerable amount of reasoning on the part of individuals and teams. Investigators need to be aware of the cognitive biases that might affect their own decision-making as well as that of their peers. If an investigator forms a theory about an incident and is unwilling to change their view to account for new and contradictory evidence, this is an example of cognitive bias.

Cognitive biases are patterns of deviation from rational thinking. These can apply to any scenario, whether in daily interactions with peers or when deciding what to purchase in a store. For investigators, however, cognitive biases can be quite dangerous: they can lead to gathering the wrong type of evidence or, worse yet, identifying the wrong person as responsible for the threat.

The following common cognitive biases are examples of the types of flawed reasoning that might impact an investigation:

1. Outcome Bias: Judging a decision based on its outcome

Outcome bias is an error made in evaluating the quality of a decision when the outcome of that decision is already known. For example, an investigator might judge a risky decision, such as acting on an unverified tip, to have been sound simply because it happened to lead to an arrest.

2. Confirmation Bias: Favoring information that confirms preconceptions

Confirmation bias is the tendency to bolster a hypothesis by seeking evidence consistent with beliefs and preconceptions while disregarding inconsistent evidence. In criminal investigations, preference for hypothesis-consistent information could contribute to false convictions by leading investigators to disregard evidence that challenges their theory of a case.

3. Automation Bias: Favoring automated decision-making

Automation bias is the tendency to favor the output of automated decision-making systems and to ignore contradictory conclusions reached without automation, even when those conclusions are correct. This type of bias is increasingly relevant for investigators who rely on automated systems. For example, a cybersecurity professional may assume their system is not under threat given the lack of automated alerts, despite concerns from specific individuals who believe they are being targeted by hackers. Automation might also flag a threat that is not actually there. In that case, automation bias would compel investigators to pursue the flagged problem despite facts that contradict it, wasting valuable resources that could be applied elsewhere.

4. Clustering Illusion: Seeing patterns in random events

The clustering illusion is the intuition that random events which occur in clusters are not really random. An investigator might uncover pieces of information that are only weakly correlated but, because of false assumptions about how likely that correlation is to arise by chance, read a meaningful pattern into them. For example, an investigation into a suspect’s online footprint might uncover the person’s name associated with multiple posts with a similar sentiment. By looking at additional information and conducting in-person interviews, however, the investigator could counter this bias by determining that the posts were made by two different people.

5. Availability Heuristic: Overestimating the value of information readily available

The availability heuristic is a mental shortcut that relies on immediate examples that come to a given person’s mind when evaluating a specific decision. Heavily publicized risks are easier to recall than a potentially more threatening risk. This impinges on individual perspectives but can also guide public policy.

6. Stereotyping: Expecting a group or person to have certain qualities without having real information about them

Stereotyping involves an over-generalized belief about a particular category of people. Stereotypes are generalized because one assumes that the stereotype is true for each individual person in the category. Stereotypes can be helpful in making quick decisions, but they may be erroneous, and they are said to encourage prejudice. Stereotyping is the key driver behind racial profiling, whereby members of a specific race or ethnicity are associated with the crimes that group is typically believed to perpetrate.

7. Law of the Instrument: Over-reliance on a familiar tool

Law of the instrument, or law of the hammer, is a bias that involves an over-reliance on a familiar tool. As Abraham Maslow said, “I suppose it is tempting, if the only tool you have is a hammer, to treat everything as if it were a nail.” This is especially important for investigators in the digital age. As old behaviors are replaced with new behaviors that intersect with the web, investigators must update their toolkit to be able to investigate crimes. For example, the thriving online drug trade requires narcotics investigators to reexamine the tools and tactics they use to solve a case to gain a better understanding of the people and events involved.

8. Blind-spot Bias: Failing to recognize one’s own biases

Blind-spot bias, while last in our list, should always be top of mind. Investigators must continually assess possible biases that may impact their thinking unconsciously, and make conscious attempts to address them. People tend to attribute bias unevenly so that when people reach different conclusions, they often label one another as biased while viewing themselves as accurate and unbiased.

Great investigators will understand and reflect on these cognitive biases continually during an investigation. Mental noise, wishful thinking – these things happen to the best of us. So remember always: Check your bias.

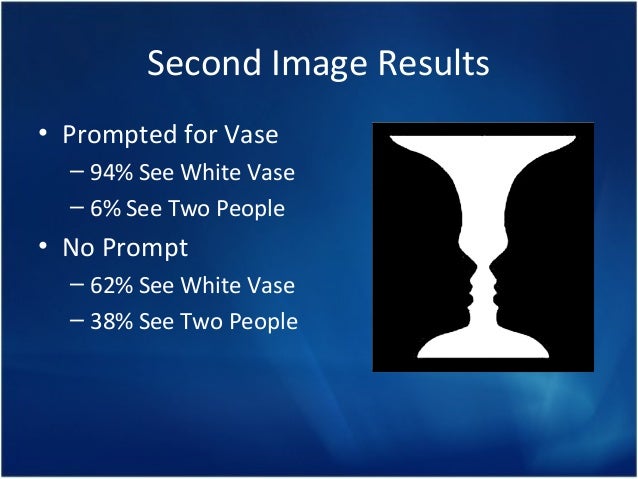

The clustering illusion is the intuition that random events which occur in clusters are not really random events. The illusion is due to selective thinking based on an intuitive but false assumption regarding statistical odds. For example, it strikes most people as unexpected if heads comes up four times in a row during a series of coin flips. However, in a series of 20 flips, there is about a 50% chance of getting four heads in a row (Gilovich). Similarly, it may seem unexpected, but the chances are better than even that a given neighborhood in California will have a statistically significant cluster of cancer cases (Gawande).
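The coin-flip figure can be checked directly. The sketch below is an illustration rather than anything taken from Gilovich; the function name `prob_run_of_heads` is hypothetical. It counts every sequence of fair flips that avoids a run of heads, assuming independent flips, and the exact answer for four heads in a row within 20 flips comes out slightly under one half (roughly 0.478).

```python
from fractions import Fraction


def prob_run_of_heads(n_flips: int, run_len: int) -> Fraction:
    """Exact probability that n_flips fair, independent coin flips contain
    at least one run of run_len or more consecutive heads."""
    # counts[k] = number of sequences seen so far that contain no qualifying
    # run and currently end in exactly k consecutive heads.
    counts = [0] * run_len
    counts[0] = 1  # the empty sequence
    for _ in range(n_flips):
        new = [0] * run_len
        new[0] = sum(counts)        # a tail resets the current streak
        for k in range(1, run_len):
            new[k] = counts[k - 1]  # a head extends the streak by one
        counts = new
    no_run = sum(counts)
    return 1 - Fraction(no_run, 2 ** n_flips)


p = prob_run_of_heads(20, 4)
print(f"P(4+ heads in a row within 20 flips) = {float(p):.3f}")
```

Using `Fraction` keeps the arithmetic exact, so the result is not affected by floating-point rounding.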

What would be rare, unexpected, and unlikely due to chance would be to flip a coin twenty times and have each result be the alternate of the previous flip. In any short run of 2, 4, 6, 8, etc., random flips, an exact even split of heads and tails, which is what we know logically is predicted by chance, is at best an even bet and usually less likely than not. In the long run, a coin flip will yield 50% heads and 50% tails (assuming a fair flip and a fair coin). But in any short run, a wide variety of outcomes is to be expected, including some that seem highly improbable.
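A short calculation makes both points concrete. This is a minimal sketch assuming a fair coin and independent flips; the helper names `prob_even_split` and `prob_perfect_alternation` are hypothetical, introduced only for illustration.

```python
from fractions import Fraction
from math import comb


def prob_even_split(n_flips: int) -> Fraction:
    """Probability that n_flips fair flips give exactly half heads, half tails."""
    if n_flips % 2:
        return Fraction(0)  # an odd number of flips cannot split evenly
    return Fraction(comb(n_flips, n_flips // 2), 2 ** n_flips)


def prob_perfect_alternation(n_flips: int) -> Fraction:
    """Probability that every flip differs from the one before it.
    Only two sequences qualify (HTHT... and THTH...); assumes n_flips >= 1."""
    return Fraction(2, 2 ** n_flips)


for n in (2, 4, 6, 8, 20):
    print(f"{n:2d} flips: P(exact 50/50 split) = {float(prob_even_split(n)):.3f}")

print(f"P(20 perfectly alternating flips) = {float(prob_perfect_alternation(20)):.7f}")
```

The chance of an exact even split starts at one half for two flips and shrinks as the run gets longer, while a perfectly alternating sequence of twenty flips has a probability of 1 in 524,288.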

Finding a statistically unusual number of cancers in a given neighborhood, such as six or seven times greater than the average, is not rare or unexpected. Much depends on where you draw the boundaries of the neighborhood. Clusters of cancers that are seven thousand times higher than expected, such as the incidence of mesothelioma in Karain, Turkey, are very rare and unexpected. The incidence of thyroid cancer in children near Chernobyl was one hundred times higher after the disaster (Gawande).
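One way to see why "significant" clusters are common is to count how many separate comparisons are being made. The sketch below is a simplified model, not the analysis behind the figures above: it assumes the tests are independent, and the inputs (80 cancer types, each tested at a 1% significance level) are illustrative assumptions chosen for the example. The function name `prob_any_false_alarm` is hypothetical.

```python
def prob_any_false_alarm(n_tests: int, alpha: float) -> float:
    """Probability that at least one of n_tests independent significance
    tests comes out 'significant' purely by chance, when each test has
    false-positive rate alpha."""
    return 1.0 - (1.0 - alpha) ** n_tests


# Illustrative assumption: one neighborhood, 80 cancer types, 1% level each.
p = prob_any_false_alarm(n_tests=80, alpha=0.01)
print(f"P(at least one chance 'cluster') = {p:.3f}")
```

Under these assumed inputs the probability is a little over one half, so a chance elevation in some cancer is more likely than not for any single neighborhood. Redrawing the neighborhood boundaries after looking at the data adds further implicit comparisons on top of this.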

Sometimes a subject in an ESP experiment or a dowser might be correct at a higher than chance rate. However, such results do not indicate that an event is not a chance event. In fact, such results are predictable by the laws of chance. Rather than being signs of non-randomness, they are actually signs of randomness. ESP researchers are especially prone to take streaks of 'hits' by their subjects as evidence that psychic power varies from time to time. Their use of optional starting and stopping is based on the presumption of psychic variation and an apparent ignorance of the probabilities of random events. Combining the clustering illusion with confirmation bias is a formula for self-deception and delusion. For example, if you are convinced that your husband's death at age 72 of pancreatic cancer was due to his having worked in a mill when he was younger, you may start looking for proof and run the danger of ignoring any piece of evidence that contradicts your belief.

A classic study was done on the clustering illusion regarding the belief in the 'hot hand' in basketball (Gilovich, Vallone, and Tversky). It is commonly believed by basketball players, coaches and fans that players have 'hot streaks' and 'cold streaks.' A detailed analysis was done of the Philadelphia 76ers shooters during the 1980-81 season. It failed to show that players hit or miss shots in clusters at anything other than what would be expected by chance. They also analyzed free throws by the Boston Celtics over two seasons and found that when a player made his first shot, he made the second shot 75% of the time and when he missed the first shot he made the second shot 75% of the time. Basketball players do shoot in streaks, but within the bounds of chance. It is an illusion that players are 'hot' or 'cold'. When presented with this evidence, believers in the 'hot hand' are likely to reject it because they 'know better' from experience. And cancers do occur in clusters, but most clusters do not occur at odds that are statistically alarming and indicative of a local environmental cause.
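The free-throw result is what one would expect if each shot were independent of the last. The simulation below is a sketch under that assumption, not a reconstruction of the study's data: it models a hypothetical shooter who makes every shot with probability 0.75 and compares the second-shot success rate after a make with the rate after a miss. The function name and the fixed seed are illustrative choices.

```python
import random


def second_shot_rates(p_make: float, n_pairs: int, seed: int = 0):
    """Simulate n_pairs of two-shot free-throw trips by a shooter whose shots
    are independent, each made with probability p_make. Returns the observed
    rates (P(second made | first made), P(second made | first missed))."""
    rng = random.Random(seed)  # fixed seed so the run is repeatable
    made_after_make = made_after_miss = 0
    n_after_make = n_after_miss = 0
    for _ in range(n_pairs):
        first = rng.random() < p_make
        second = rng.random() < p_make  # does not depend on the first shot
        if first:
            n_after_make += 1
            made_after_make += second
        else:
            n_after_miss += 1
            made_after_miss += second
    return made_after_make / n_after_make, made_after_miss / n_after_miss


after_make, after_miss = second_shot_rates(p_make=0.75, n_pairs=100_000)
print(f"after a make: {after_make:.3f}   after a miss: {after_miss:.3f}")
```

Both rates land close to 0.75, even though the simulated shooter still produces runs of consecutive makes and misses along the way.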

In epidemiology, the clustering illusion is known as the Texas sharpshooter fallacy (Gawande 1999: 37). Kahneman and Tversky called it 'belief in the Law of Small Numbers' because they identified the clustering illusion with the fallacy of assuming that the pattern of a large population will be replicated in all of its subsets. In logic, this is known as the fallacy of division: assuming that the parts must be exactly like the whole.

Further reading

Gawande, Atul. 'The Cancer-Cluster Myth,' The New Yorker, February 8, 1999, pp. 34-37.

Gilovich, Thomas. How We Know What Isn't So: The Fallibility of Human Reason in Everyday Life (New York: The Free Press, 1993).

Tversky, A. and D. Kahneman (1971). 'Belief in the law of small numbers,' Psychological Bulletin, 76, 105-110.

Last updated 13-Jan-2014